Adventures with Clawdbot: From Autonomy to Economic Reality

A deep dive into building an AI assistant, the move from rigid automation to fluid agency, and the hard lessons learned about token economics and security.

The New AI Hotness

Unless you’ve been living under a rock in the tech world lately, you’ve likely heard about the latest AI obsession: “Clawdbot” or “Moltbot” as it’s now called to avoid Anthropic’s wrath. It is an open-source framework designed to give an AI model a persistent memory and personality, promising a future where your AI isn’t just a tab in your browser, but a permanent, thinking resident on your hardware.

For the last few months, I’ve been exploring options that go far beyond simple server scripts. I wanted a 24/7 assistant that could 10X my ability to do stuff. A proactive partner that didn’t just wait for a prompt, but actually thought and acted on my behalf. I wanted an agent with a “Brain.”

Beyond the Sentinel: The Agentic Pivot

If you’ve visited my website, you may have read about how I spent considerable time building the Autonomous Homelab Sentinel. That project uses n8n for central orchestration, a web of hard-coded logic that, while effective, felt rigid. It was automation, but it wasn’t agency.

The arrival of Moltbot offered a chance to move “up-stack.” I repurposed an old 2012 Mac Mini, loaded Ubuntu Server on it, and used it to host “Jennifer”, my resident agent. Unlike the node-based logic of n8n, Jennifer used a SOUL.md file for personality and a MEMORY.md file to maintain a persistent context across every interaction, turning my new mini server into the engine room for a full-blown personal OS.
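To make the two-file idea concrete, here is a minimal sketch of how a personality file and a memory file could be folded into every request. This is my own illustration, not Moltbot's actual code: only the file names SOUL.md and MEMORY.md come from my setup, while `build_system_prompt`, `remember`, and the section labels are hypothetical.

```python
from pathlib import Path


def build_system_prompt(workdir: Path) -> str:
    """Combine the personality and memory files into one system prompt.

    Hypothetical helper: it illustrates the idea, not Moltbot's internals.
    """
    sections = []
    for label, filename in (("Personality", "SOUL.md"), ("Memory", "MEMORY.md")):
        path = workdir / filename
        # A missing file is treated as empty so a fresh install still works.
        text = path.read_text(encoding="utf-8").strip() if path.exists() else ""
        sections.append(f"## {label}\n{text}")
    return "\n\n".join(sections)


def remember(workdir: Path, note: str) -> None:
    """Append a note to MEMORY.md so later sessions can see it."""
    with (workdir / "MEMORY.md").open("a", encoding="utf-8") as handle:
        handle.write(f"- {note}\n")
```

The point of the sketch is that the "persistence" lives in plain files on disk: anything appended with `remember` is re-read on the next call to `build_system_prompt`, which is also why the context (and the token bill) grows over time.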

The “Astonishingly Simple” 10X Assistant

The most striking thing about Jennifer wasn’t just what she could do, but how astonishingly simple it was to achieve. With traditional automation, you spend hours debugging nodes and logic gates. Even using Claude Code with n8n MCP integration and skills, it was still extremely time-consuming. With Jennifer, very technical tasks were just completed without any bother.

Once Moltbot was set up (a process that included a simple Telegram integration), it didn’t feel like working with a “chatbot” anymore. It felt like having a highly intelligent technical assistant on the payroll. I would simply tell her my goal, whether in the terminal, the web UI, or via Telegram, and the work happened behind the scenes:

-

Life Logistics: She integrated with and managed my to-do list, moving fluidly between conversation and structured data.

-

Email Synthesis: I gave her read-only access to my email inbox with instructions to identify anything that she felt she could help with. I soon noticed to-do items showing up in my list based on emails I’d received, without me lifting a finger.

-

Network Sentinel: She monitored my entire network via read-only APIs and alerted me proactively about any issues.

-

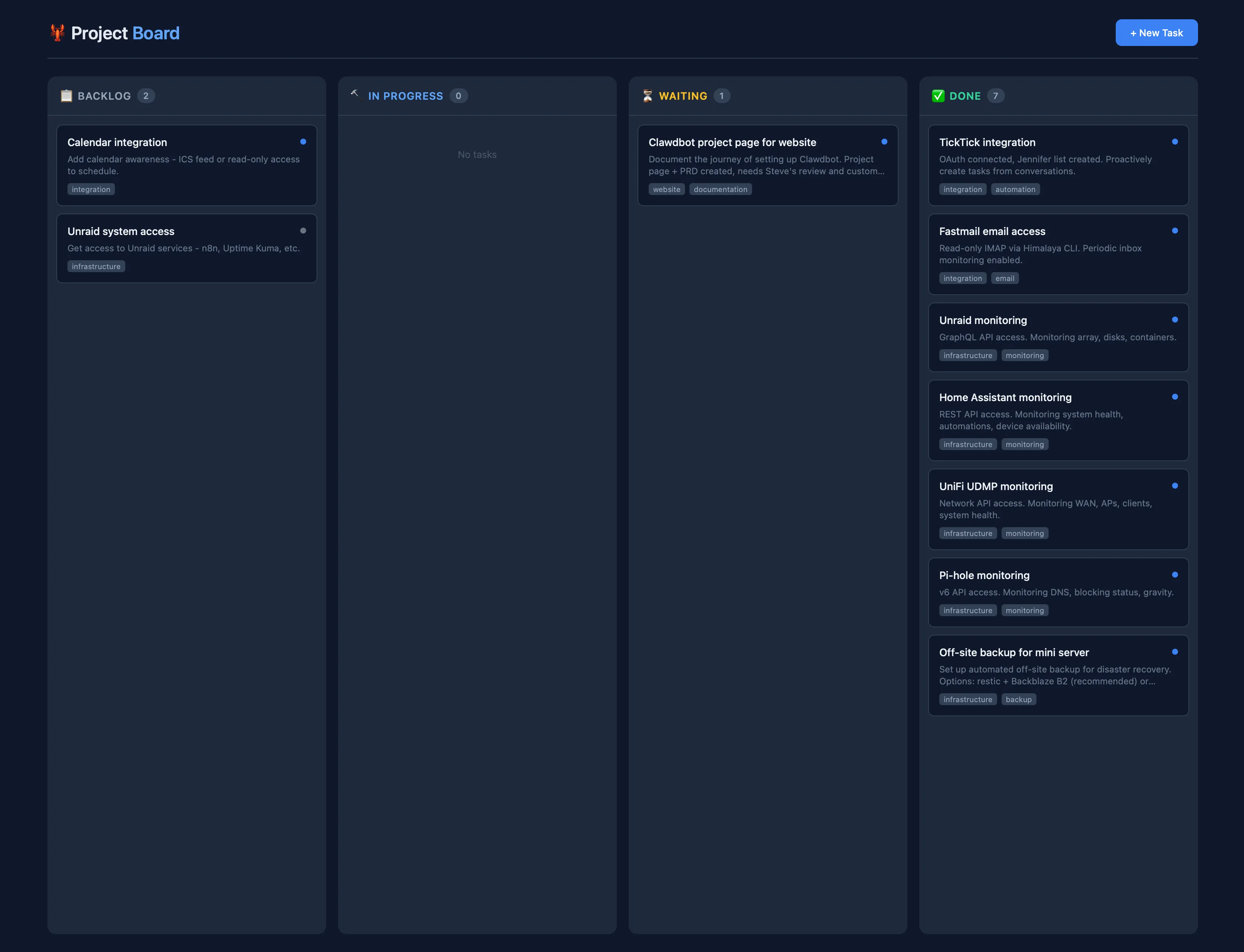

Proactive Engineering: Without me needing to spec out the “how,” Jennifer built and deployed three functional apps on a local web server (accessible anywhere via Tailscale): an Interactive Homelab Dashboard, a Blogging Ideas Management tool, and a Project Management Kanban app.

I didn’t have to write requirements. I didn’t have to put together a PRD. I discussed what I wanted to do and Jennifer just went and did it. Each of these mini “Apps” is well-designed and just works. All of this, from first installing Clawdbot to having everything integrated, took a few hours.

The Security Shadow: Autonomy vs. Vulnerability

However, this level of power is a double-edged sword. To make Jennifer a “10X” assistant, I needed to give her deep access to my local machine.

There are “gaping holes” in these early agentic frameworks that the community is still grappling with. By giving an AI shell access, you are creating a wide-open gateway into your network. If the framework is compromised, or the agent is “jailbroken” via prompt injection, you aren’t just losing data: you have a persistent, autonomous actor working against you from inside your firewall.

The Economic Wall: Subscriptions vs. Reality

Even if you stomach the security risks, you hit the Agency Tax. Here is the cold reality: Using an agent like Moltbot 24/7 essentially violates the “personal use” spirit of Anthropic’s Pro or Max subscription plans. Those plans are built for human-speed chatting, not high-frequency machine orchestration. To stay above board, you have to move to a Pay-As-You-Go API billing model.

This is when the reality hits, hard. When you can literally see your token costs moving in real-time as your assistant endlessly polls your environment to “stay on top of everything,” the dream becomes a budget item. To be a “Jarvis,” Jennifer has to stay “alive,” and that life is measured in millions of tokens.
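To put rough numbers on that, here is a back-of-the-envelope sketch of what constant polling costs on pay-as-you-go billing. The function name and every figure in the usage note are placeholders I picked for illustration, not measurements from my setup or quoted prices; plug in your own poll rate, context size, and your provider's current per-million-token rates.

```python
def monthly_polling_cost(
    polls_per_hour: float,
    input_tokens_per_poll: int,
    output_tokens_per_poll: int,
    input_price_per_mtok: float,
    output_price_per_mtok: float,
    days: int = 30,
) -> float:
    """Estimate the monthly API cost of an agent that polls around the clock.

    Prices are per million tokens, in whatever currency you are billed in.
    """
    polls = polls_per_hour * 24 * days
    input_cost = polls * input_tokens_per_poll / 1_000_000 * input_price_per_mtok
    output_cost = polls * output_tokens_per_poll / 1_000_000 * output_price_per_mtok
    return input_cost + output_cost


# Placeholder scenario: one poll every 5 minutes, 20,000 tokens of context
# sent each time, 500 tokens back, at assumed rates of 5 and 25 per million.
estimate = monthly_polling_cost(12, 20_000, 500, 5.0, 25.0)
print(round(estimate, 2))
```

With those assumed inputs the estimate comes to roughly 972 a month, and nearly all of it is input tokens: the agent re-sends its whole context on every poll, so the cost scales with how much it "remembers" multiplied by how often it checks in.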

You want to give your assistant the best brain possible, but using top-tier models (like Opus 4.5) for every background check is economically ruinous. I asked Jennifer to save money by dynamically upgrading and downgrading her model use, something Claude Code handles natively, and she enthusiastically agreed before promptly losing connection to all models entirely. It took about 20 minutes to get everything back up and running again.
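For what it's worth, the kind of tiering I was after can be sketched in a few lines. This is a hypothetical illustration, not how Moltbot or Claude Code actually route requests: the model identifiers are placeholders, and the task categories and the `pick_model` helper are names I made up. The one design choice worth noting is the fallback, which returns any model that is still reachable instead of failing outright.

```python
from dataclasses import dataclass

# Placeholder identifiers, not real model names; substitute your provider's.
CHEAP_MODEL = "small-model-placeholder"
PREMIUM_MODEL = "large-model-placeholder"

# Hypothetical categories of background work that do not need the best model.
ROUTINE_TASKS = frozenset({"heartbeat", "network_check", "inbox_scan"})


@dataclass(frozen=True)
class Task:
    kind: str
    prompt: str


def pick_model(task: Task, available: set[str]) -> str:
    """Pick the cheap model for routine work and the premium one otherwise.

    If the preferred model is unreachable, fall back to any available model
    so a routing change cannot cut the agent off from every model at once.
    """
    preferred = CHEAP_MODEL if task.kind in ROUTINE_TASKS else PREMIUM_MODEL
    if preferred in available:
        return preferred
    if not available:
        raise RuntimeError("no models are reachable")
    # Deterministic fallback: first available model in sorted order.
    return sorted(available)[0]
```

A background `heartbeat` task would go to the cheap tier, while a request to build an app would go to the premium one, and either would degrade to whatever is still reachable.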

Moving Up-Stack: The Current State

I’ve had to concede: until costs drop by an order of magnitude and security frameworks catch up, the 24/7 autonomous agent is a luxury I can’t justify. If an agent can’t afford to “listen” proactively, it stops being an agent and goes back to being a high-latency chatbot.

I’ve shifted back from Agency to Tooling. I now use Claude Code on the Ubuntu Server as a human-triggered, high-reasoning engine. My monitors are back to being efficient, silent, and free cron jobs. This setup lacks Jennifer’s “human-speak,” but it is Strategically Realistic.

The Jennifer experiment was a preview of the next age of product management. The tech is ready; the economics and security are simply waiting to catch up. I cannot wait until we get there.